This series has meandered down a long and winding path from basic

concepts to theory to practice. In this final post, I’m going to

show a lot of really boring graphs and charts to answer the big

question: What about performance?

Part 1 – Hyper-V storage fundamentals

Part 2 – Drive Combinations

Part 3 – Connectivity

Part 4 – Formatting and file systems

Part 5 – Practical Storage Designs

Part 6 – How To Connect Storage

Part 7 – Actual Storage Performance

I think what people really want to know is, out of all the various

ways storage can be configured, what’s going to provide the best

performance? I will, as always, caution against being that person with

an unhealthy obsession with performance. I’ve met too many individuals

who will stress over an extra millisecond or two of latency in a system

where no one will think anything is wrong unless the delay passes

several seconds. If you truly need ridiculously high storage speed and

you are not already a storage expert, hire one.

Introduction

What I’m going to do in this post is show you performance results

that were all taken from the exact same hardware configured in several

different ways. The test was configured exactly the same way each time.

What I want you to understand from that is that the numbers themselves

are largely irrelevant. The hardware that I used is not up to the

challenges of anything more than a small business environment, and by

small, I mean something like under twenty users. What’s important is how

the numbers do or do not compare against each other across the various

test types.
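One way to read results in that spirit is to express every test as a percentage of a chosen baseline rather than looking at the raw figures. The sketch below shows the idea; the configuration names and IOPS values are placeholders invented for illustration and are not measurements from these tests.

```python
def relative_to_baseline(results, baseline):
    """Express each result as a percentage of the baseline result."""
    base = results[baseline]
    if base <= 0:
        raise ValueError("baseline must be positive")
    return {name: round(100.0 * value / base, 1) for name, value in results.items()}


# PLACEHOLDER IOPS values, chosen only to make the arithmetic easy to follow.
# They are NOT taken from the tests described in this post.
example_iops = {"baseline": 400.0, "config_a": 300.0, "config_b": 200.0}

relative = relative_to_baseline(example_iops, "baseline")
print(relative)  # {'baseline': 100.0, 'config_a': 75.0, 'config_b': 50.0}
```

Normalizing this way keeps the focus on how the configurations rank against each other, which is the part that should carry over to other hardware.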

Materials and Methods

This section lists the hardware, software, and configurations used in the tests.

Hardware

In the server used as the storage server, two Seagate ST3000DM001

drives were added to the defined configuration. These were placed into a

mirror Storage Spaces array.

Although it should not have impacted the test outcomes, both of the

Hyper-V systems were expanded so that their internal drives are in a

hardware RAID-1 configuration. No test was run from these drives.

The hardware switch used is the Netgear GS716T device indicated in the document, with firmware version 5.4.1.10.

This test used a virtual machine running Windows Server 2012 R2 Standard Edition in evaluation mode, build 9600.

Software

The virtual machine used in testing was running Windows Server 2012

R2 Standard Edition, evaluation build 9600.

The testing software used was IOMeter version 1.1.0 RC1.

The storage host is running Windows Storage Server 2012 R2 Standard Edition, same build and patch level as the guest.

The two Hyper-V hosts are running Hyper-V Server 2012 R2, same build and patch level as the guest.

No out-of-band hotfixes were applied to any system; only updates available through Windows Update were applied.

Configuration

The test virtual machine was a generation 1 machine assigned 2

virtual CPUs and 2 GB of RAM. Its C: drive was a dynamically expanding

drive with a limit of 60 GB. This drive was placed on separate storage

from the test volume. The test volume was attached to the virtual SCSI

chain and set to 260 GB in size. It was dynamic in some tests but

converted to fixed for others. The type used will be reported in each

test result. Both disks were in the VHDX format.

The IOMeter test was configured as follows:

- Only one worker was allowed.

- The Maximum Disk Size was set to 65,536,000 sectors on disks with a 4K sector size and 524,288,000 sectors on disks with a 512-byte sector size; either way, this results in a 250 GB test file.

- A single Access Specification was designed and used: 16K; 75% Read; 50% Random

- The Test Setup was limited to a 30-minute run

- Results and configurations were saved on the virtual machine’s C: drive for tests run inside the VM

- All other settings were kept at default

The hosts were configured as follows:

- Onboard (Broadcom NetXtreme I) adapters were left strictly for management

- All other adapters in the Hyper-V nodes (4x Realtek) were fully converged in LACP with Dynamic load-balancing in most tests

- Some tests, indicated in the text, broke the teams so that each node had 2x Realtek in a Dynamic load-balancing team passing all traffic besides management and storage. The other 2x Realtek adapters were each assigned their own IP and participated directly in the storage network.

- The Realtek adapters do not support VMQ or RSS

- The two Hyper-V nodes communicated with the storage server using 2 vNICs dedicated to storage communications on a specific VLAN.

- MPIO was enabled across the nodes’ storage NICs for iSCSI access. SMB multichannel was in effect for SMB tests. This was true in both vNIC and pNIC configurations. The MPIO method was the default of round-robin.

- The two other adapters in the storage node (2x Realtek) were each assigned a distinct IP and placed into the VLAN dedicated to storage

- The two Hyper-V nodes were joined into a failover cluster

- All tests were conducted against data on the 3 TB Storage Space

- Between tests, data was moved as necessary to keep the test file/VHDX as close to the start of the physical drive as possible.

- No other data was on the disk beyond the MFT and other required Windows data.

- iSCSI target was provided by the built-in iSCSI Target of Windows Storage Server 2012 R2

- SMB 3.02 access was also provided by the built-in capability of Windows Storage Server 2012 R2
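For readers unfamiliar with the round-robin MPIO policy mentioned above, the idea is simply that successive I/O requests alternate across the available paths. The following is a conceptual sketch only, not how the Windows MPIO stack is implemented; the path names are hypothetical.

```python
from collections import Counter
from itertools import cycle


def round_robin(paths, request_count):
    """Assign each request to the next path in turn, wrapping around."""
    chooser = cycle(paths)
    return [next(chooser) for _ in range(request_count)]


# Hypothetical names standing in for a node's two storage NICs.
paths = ["storage-nic-1", "storage-nic-2"]

assignments = round_robin(paths, 10)
per_path = Counter(assignments)
print(per_path)
```

With two paths and an even number of requests, each path ends up carrying exactly half of them, regardless of how busy either path is. That simplicity is worth keeping in mind when comparing the iSCSI results against SMB multichannel.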

Configuration Notes

Hardware RAID-1 would have been preferred for the 3 TB Seagate drives, but the onboard RAID controller cannot work with disks that large. It is possible that the extra CPU load of the Storage Spaces mirror negatively impacted the local test in a way that would not have impacted any of the remote tests. There was no way to control for that with the available hardware.

A truly scientific test would include more hardware variance than

this. The switch presents the major concern, because another switch was

not available to rule out any issues that this one might have.

Results

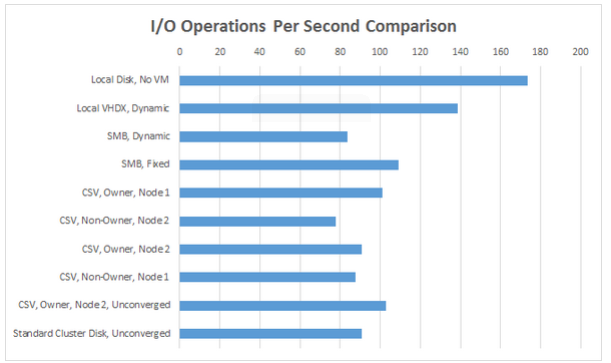

The following charts show a graphical representation of the results of each test.The first shows all results together in a single combined view:

The next three show the individual result sets.

Conclusion

Raw hardware access was, as expected, the fastest configuration tested.

However, higher-end hardware would not experience the wide range of

differences and would bring offloading technologies and optimizations

that would close the gap. Local access is, as expected, faster than

over-the-network access.

One thing the results are pretty unambiguous about is that dedicated

physical NICs work better for storage communications than virtual NICs

on a converged fabric. Again, higher-end hardware would reduce this

impact dramatically.

It also appears that running the virtual machine on a node that does not own the CSV carries a performance penalty.

Unfortunately, with a test group this small, there’s no way to be

certain that there wasn’t a network issue. This warrants further study.

The results show the multichannel SMB configuration performing better than the iSCSI configuration, all else being equal. Using SMB would also eliminate the penalty imposed by running on a node that is not the CSV owner.

The difference between the dynamic and fixed SMB results was surprisingly wide. This disparity warrants further study.
